Graph Represeantion Learning

Reading List

- A Gentle Introduction to Graph Neural Networks

- Easy to read and understand 10/10

- A Survey on Graph Representation Learning Methods

Overview

Graph representation learning aims to create graph representation vectors that accurately capture the structure and features of large graphs, which is crucial for downstream tasks like node classification, link prediction, and anomaly detection. The field has seen significant growth, with methods divided into traditional graph embedding techniques and graph neural network (GNN)-based approaches. These techniques apply to both static (fixed) and dynamic (evolving) graphs.

Introduction

Graphs are powerful data structure (social networks, financial transactions and biological networks).

- social networks, people are the nodes and thir friendships constitute the edges.

- financial transactions, the nodes and edges could be people and their money transactions.

How to represent graphs? Adjacency matrix, however, dimensionality of the adjacency matrix is often very high, and feature extraction based methods are time consuming and may not represent all the necessary information in the graphs.

Graph embedding methods have been very successful in graph representation. These methods project the graph elements (such as nodes, edges and subgraphs) to a lower dimensional space and preserve the properties of graphs.

- traditional graph embedding

- random walks, factorization embedding methods and non-GNN based deep learning→ static graph and dynamic graph

- GNN based graph embedding methods; node embeddings are obtained by aggregating the embeddings of the node’s neighbors.

- early works based on rnn, later convolutional graph neural nets were developed based on the convolution operation

- spatial-temporal GNNs and dynamic GNNs leverage the strengths of GNNs in evolving networks.

What we will cover? 1. Basic knowledge on Graphs, 2. Traditional node embedding methods for static and dynamic graphs, 3. Static, Spatial-temporal and dynamic GNN, 4. limitation of GNNs.

Graphs

A graph can be directed or undirected. In a directed graph, an edge \(e_{k}=\left(v_{i}, v_{j}\right)\) has a direction with \(v_{i}\) being the starting vertex and \(v_{j}\) the ending vertex. Graphs can be represented by their adjacency, degree and Laplacian matrices, which are defined as follows:

Graph Embedding

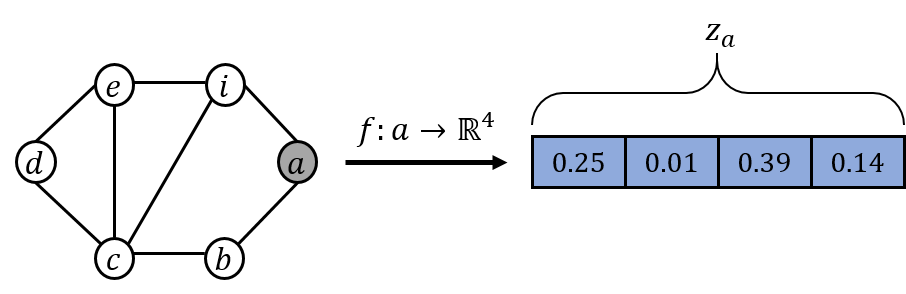

In order to use graphs in downstream machine learning and data mining applications, graphs and their entities such as nodes and edges need to be represented using numerical features.

- graph embedding methodds have been proposed, which study the issue of automatically generating representation vectors for the graphs.

- these methods formulate the graph representation learning as a machine learning task and generate embedding vectors leveraging the structure and properties of the graph as input data.

Graph embedding techniques include node, edge and subgraph embedding techniques, which are defined as follows

An embedding vector for the edge \(\left(v_{i}, v_{j}\right)\) can be obtained by applying a binary operation such as hammard product, mean, weighted-L1 and weighted-L2 on the two node embedding vectors \(z_{i}\) and \(z_{j}\).

A subgraph embedding vector is usually created by aggregating the embeddings of the nodes in the subgraph using aggregators such as a mean operator. Almost all the graph embedding techniques developed so far are node embedding techniques.

Graph Embedding Applications

Node Classification. Node classification task assigns a label to the nodes in the test dataset. This task has many applications in different domains. For instance, in social networks, a person’s political affiliation can be predicted based on his friends in the network. In node classification, each instance in the training dataset is the node embedding vector and the label of the instance is the node label. Different regular classification methods such as Logistic Regression and Random Forests can be trained on the training dataset and generate the node classification scores for the test data. Similarly, Graph classification can be performed using graph embedding vectors.

Link Prediction. Link prediction is one of the important applications of node embedding methods. It predicts the likelihood of an edge formation between two nodes. Examples of this task include recommending friends in social networks and finding biological connections in biological networks. Link prediction can be formulated as a classification task that assigns a label for edges. Edge label 1 means that an edge is likely to be created between two nodes and the label is 0 otherwise. For the training step, a sample training set is generated using positive and negative samples. Positive samples are the edges the exist in the graph. Negative samples are the edges that do not exist and their representation vector can be generated using the node vectors. Similar to node classification, any classification method can be trained on the training set and predict the edge label for test edge instances.

Anomaly Detection. Anomaly detection is another application of node embedding methods. The goal of anomaly detection is to detect the nodes, edges, or graphs that are anomalous the time that anomaly occurs. Anomalous nodes and graphs deviate from normal behavior.

- For instance, in banks’ transaction networks, people who suddenly send or receive large amounts of money or create lots of connections with other people could be potential anomalous nodes.

An anomaly detection task can be formulated as a classification task such that each instance in the dataset is the node representation and the instance label is 0 if the node is normal and 1 if the node is anomalous. This formulation needs that we have a dataset with true node labels. One of the issues in anomaly detection is the lack of datasets with true labels.

- An alleviation to this issue in the literature is generating synthetic datasets that model the behaviors of real world datasets.

Another way to formulate the anomaly detection problem, especially in dynamic graphs, is viewing the problem as a change detection task. In order to detect the changes in the graph, one way is to compute the distance between the graph representation vectors at consecutive times. The time points that the value of this difference is far from the previous normal values, a potential anomaly has occurred.

Other graph embedding techniques includes graph clustering and visualization.